Business

Leading AI Expert Leaves Field to Pursue Poetry Amidst Growing Concerns

An OpenAI researcher resigned this week, citing concerns over the company’s recent move to begin trialing advertisements within ChatGPT.

A researcher specializing in AI safety has left his position at Anthropic, a US-based company, with a parting warning that the global situation is precarious.

Mrinank Sharma's resignation letter, posted on X, cited concerns regarding AI, bioweapons, and the broader state of the world as reasons for his departure from the firm.

Sharma intends to shift his focus to creative pursuits, including writing and studying poetry, and plans to relocate to the UK, where he aims to maintain a lower profile.

This development coincides with the resignation of an OpenAI researcher, who expressed concerns about the company's decision to introduce advertisements in its ChatGPT platform.

Anthropic, the developer of the Claude chatbot, had recently released a series of commercials criticizing OpenAI's move to include ads for certain users.

Founded in 2021 by a group of former OpenAI employees, Anthropic has positioned itself as a leader in safety-oriented AI research, distinguishing itself from its competitors.

During his tenure at Anthropic, Sharma led a team focused on developing safeguards for AI systems.

In his resignation letter, Sharma highlighted his contributions to the company, including research on the potential risks of AI-assisted bioterrorism and the impact of AI assistants on human behavior.

Despite his positive experience at Anthropic, Sharma felt that it was time for him to move on, citing a sense of unease about the company's priorities.

In a statement, Sharma warned that the world faces numerous interconnected crises, posing threats beyond just AI and bioweapons.

He noted that despite the company's values, he had witnessed the challenges of prioritizing them in the face of competing pressures, a struggle he believed was not unique to Anthropic.

Sharma announced his plans to pursue a degree in poetry and focus on writing, marking a significant departure from his previous work in AI research.

In a subsequent response, Sharma mentioned that he would be relocating to the UK and taking a step back from public life for a while.

It is not uncommon for individuals leaving prominent AI firms to do so with substantial financial compensation and benefits, having been attracted to these companies by lucrative salary packages.

Anthropic describes itself as a public benefit corporation committed to harnessing the benefits of AI while mitigating its risks.

The company has specifically focused on addressing the potential dangers posed by advanced AI systems, including the risk of these systems becoming misaligned with human values or being exploited for malicious purposes.

Anthropic has released reports on the safety of its products, including one on an incident in which its technology was exploited by hackers to carry out sophisticated cyber attacks.

However, the company has also faced criticism for its own practices. In 2025, it agreed to pay $1.5 billion to settle a class-action lawsuit filed by authors who claimed that Anthropic had used their work without permission to train its AI models.

Like OpenAI, Anthropic seeks to capitalize on the benefits of AI technology, including through the development of its own AI products, such as the Claude chatbot.

The company recently released an advertisement criticizing OpenAI's decision to introduce ads in its ChatGPT platform.

OpenAI CEO Sam Altman had previously expressed his dislike for ads, stating that he would only consider using them as a last resort.

In response to Anthropic's criticism, Altman defended OpenAI's decision, but his lengthy statement was met with ridicule.

In an opinion piece published in the New York Times, former OpenAI researcher Zoe Hitzig expressed deep concerns about the company's strategy, particularly with regard to advertising.

Hitzig noted that users often share sensitive information with chatbots, including medical concerns, personal problems, and beliefs, which could be exploited by advertisers.

She warned that the use of this sensitive information for advertising purposes could have unintended consequences, including the potential for manipulating users in ways that are not yet fully understood.

Hitzig expressed fears that OpenAI's approach to advertising may already be undermining the company's core principles, which prioritize the well-being of humanity.

She cautioned that if OpenAI's advertising strategy does not align with its values, it may accelerate the erosion of these principles.

BBC News has reached out to OpenAI for a response to these concerns.

Business

Artist Alleges AirAsia Used His Work Without Permission

A street artist from Penang has reported that one of his artworks has been replicated and featured on the design of an airplane.

A Malaysia-based artist has filed a lawsuit against AirAsia and its parent company, Capital A Berhad, alleging unauthorized use of his designs on one of the airline's planes.

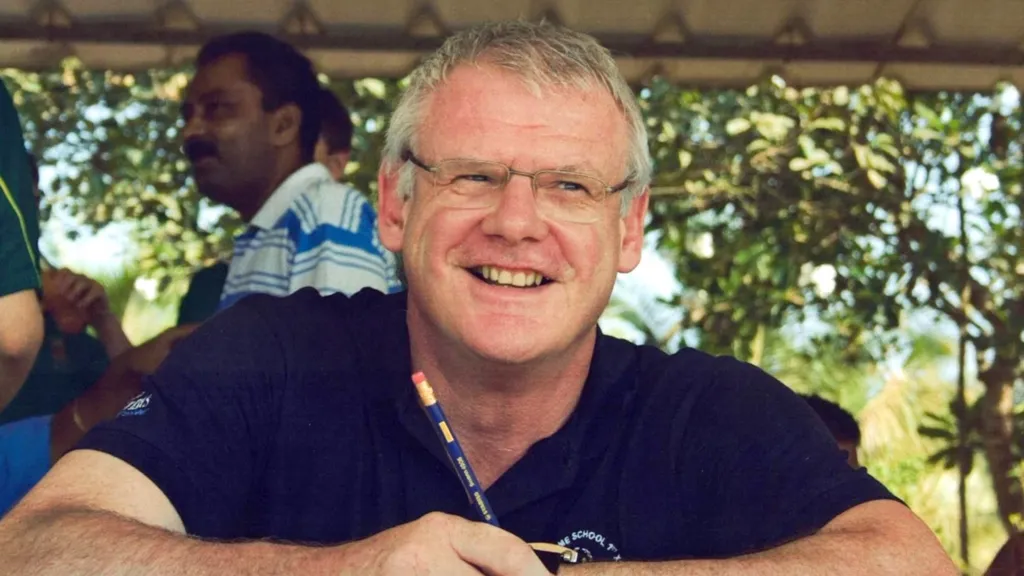

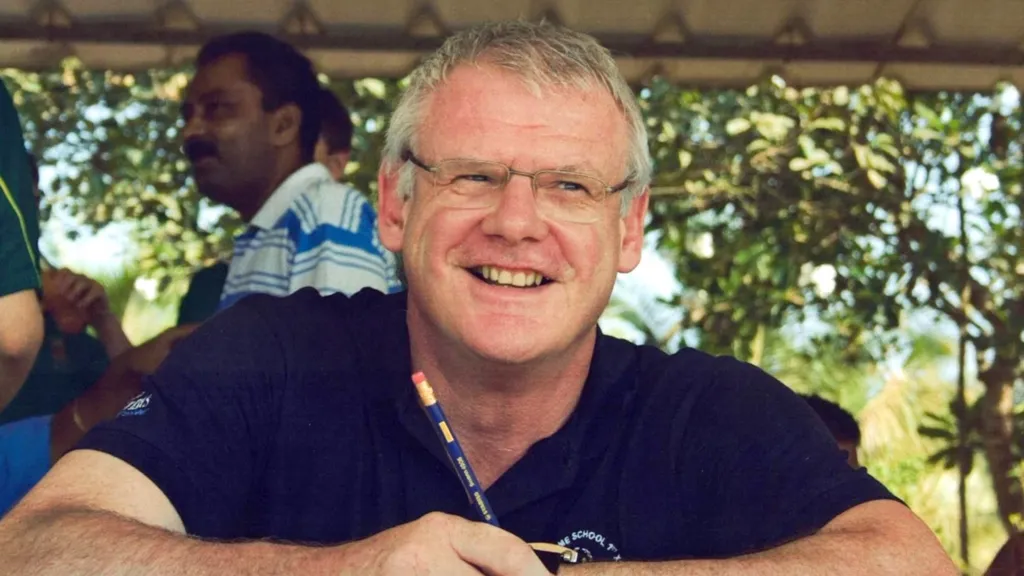

According to the lawsuit, Ernest Zacharevic, a Lithuanian-born artist residing in Penang, claims that his 2012 street mural, Kids on Bicycle, was reproduced and displayed on an AirAsia aircraft in late 2024 without his permission.

Zacharevic states that the use of his design was unauthorized, and no licensing agreement or consent was obtained, adding that the livery was removed after he publicly expressed concerns about the matter.

The BBC has reached out to AirAsia for a statement regarding the allegations.

In an interview with the BBC on Thursday, Zacharevic recalled that he first became aware of the alleged copyright infringement in October 2024, when he discovered that an AirAsia plane was featuring a livery resembling his artwork.

Zacharevic, who has worked in Malaysia for more than a decade, is known for his roadside murals in Penang, which have become a staple of the local art scene.

One of Zacharevic's notable works is the 2012 street mural Kids on Bicycle, created for a local festival. Located in George Town's heritage district, it features two children on a bicycle that is integrated into the mural.

The mural has become a popular tourist attraction, with many visitors taking photos in front of the iconic artwork.

Zacharevic alleges that his work was reproduced on an AirAsia plane without his knowledge or consent, and says he personally saw the plane in operation at an airport.

Recalling the incident, Zacharevic expressed his discomfort with the situation, which occurred in 2024.

He took to social media to address the issue, posting a photo of the plane and tagging the airline, suggesting that they needed to discuss the use of his artwork.

Since then, Zacharevic has engaged in discussions with the company, but they have been unable to come to a mutually agreeable resolution.

This is not the first instance of Zacharevic's work being used in connection with AirAsia, as he claims the airline has also used his artwork on a delivery bag for its food services arm.

Court documents reveal that Zacharevic had previously discussed a potential collaboration with AirAsia in 2017, where he would create art for the airline's jets and a mural in one of their offices.

According to the documents, Zacharevic had informed the airline of his work and business rates during these discussions.

The lawsuit asserts that despite being aware of Zacharevic's work and rates, the airline proceeded to reproduce and publicly display one of his notable works, thereby infringing on his copyright and moral rights.

As the largest low-cost carrier in Asia, AirAsia operates over 200 jets to more than 100 destinations, and has recently announced plans to resume flights from Kuala Lumpur to London via Bahrain.

Zacharevic has stated that he will leave it to the court to determine any potential compensation he may be entitled to.

The artist emphasized that his artwork is not a mere reference to cultural or geographical associations but a distinct artistic creation.

Zacharevic stressed that his artwork is the result of years of professional training, skill, and labor, and should be recognized as such.

Business

US Businesses and Consumers Bear Brunt of Trump Tariff Costs, According to NY Fed

In 2025, collective US import tariff rates rose by more than 300 percent for a range of imported goods.

President Donald Trump's changes to tariff agreements with several countries had one consistent outcome: increased costs for US-based companies and consumers.

According to a study released on Thursday by the Federal Reserve Bank of New York, the average tariff rate on imported goods rose from 2.6% at the beginning of 2025 to 13%.

The New York Fed's research revealed that US companies absorbed approximately 90% of the costs associated with the higher tariffs imposed by Trump on goods from countries such as Mexico, China, Canada, and the European Union.

The Federal Reserve Bank of New York stated that "the majority of the economic burden of the high tariffs imposed in 2025 continues to be borne by US firms and consumers."

When tariff rates changed and increased in the previous year, exporting countries did not adjust their prices to mitigate potential declines in US demand.

Instead of lowering prices, exporters maintained their existing prices and transferred the tariff costs to US importers, who subsequently increased the prices of these goods for consumers.

The response of exporters in 2025 was similar to their reaction in 2018, when Trump introduced certain tariffs during his first term, resulting in higher consumer prices with minimal other economic effects, as noted by the New York Fed at the time.

The New York Fed's findings on Thursday are consistent with the results of other recent analyses.

The Kiel Institute for the World Economy, a German research organization, reported last month that its research indicated "nearly complete pass-through of tariffs to US import prices."

By analyzing 25 million transactions, Kiel researchers discovered that the prices of goods from countries like Brazil and India did not decrease.

The Kiel report noted that "trade volumes declined" instead, indicating that exporters preferred to reduce the quantity of goods shipped to the US rather than lower their prices.

The National Bureau of Economic Research also found that the pass-through of tariffs to US import prices was "nearly 100%", meaning that the US bears the cost of the price increase, not the exporting countries.

Similarly, the Tax Foundation, a Washington DC-based think tank, found that the increased tariffs on goods in 2025 resulted in higher costs for American households.

The Tax Foundation considered tariffs as a new tax on consumers and estimated that the 2025 increases resulted in an average cost of $1,000 (£734.30) per household, with a projected cost of $1,300 in 2026.

The Tax Foundation reported that the "effective" tariff rate, which accounts for decreased consumer purchases due to higher prices, is now 9.9%, representing the highest average rate since 1946.

The Tax Foundation concluded that the economic benefits of tax cuts included in Trump's "Big Beautiful Bill" will be entirely offset by the impacts of the tariffs on households.

Business

BBC Reporter Exposed to Cyber Attack Due to Vulnerabilities in AI Coding Tool

The demand for vibe-coding tools, which enable individuals without coding experience to develop applications using artificial intelligence, is experiencing rapid growth.

A significant and unresolved cyber-security vulnerability has been identified in a popular AI coding platform, according to information provided to the BBC.

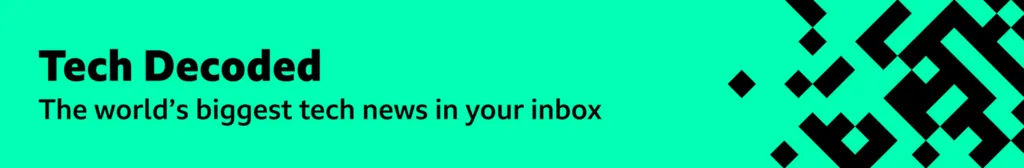

The platform, known as Orchids, utilizes a "vibe-coding" approach, enabling individuals without technical expertise to create apps and games by inputting text prompts into a chatbot.

In recent months, such platforms have gained widespread popularity, often being touted as an early example of how AI can rapidly and affordably perform various professional tasks.

However, experts warn that the ease with which Orchids can be compromised highlights the risks associated with granting AI bots extensive access to computers in exchange for autonomous task execution.

Despite repeated requests for comment, the company has not responded to the BBC's inquiries.

Orchids claims to have a user base of one million and boasts partnerships with top companies, including Google, Uber, and Amazon.

According to ratings from App Bench and other analysts, Orchids is considered the top program for certain aspects of vibe coding.

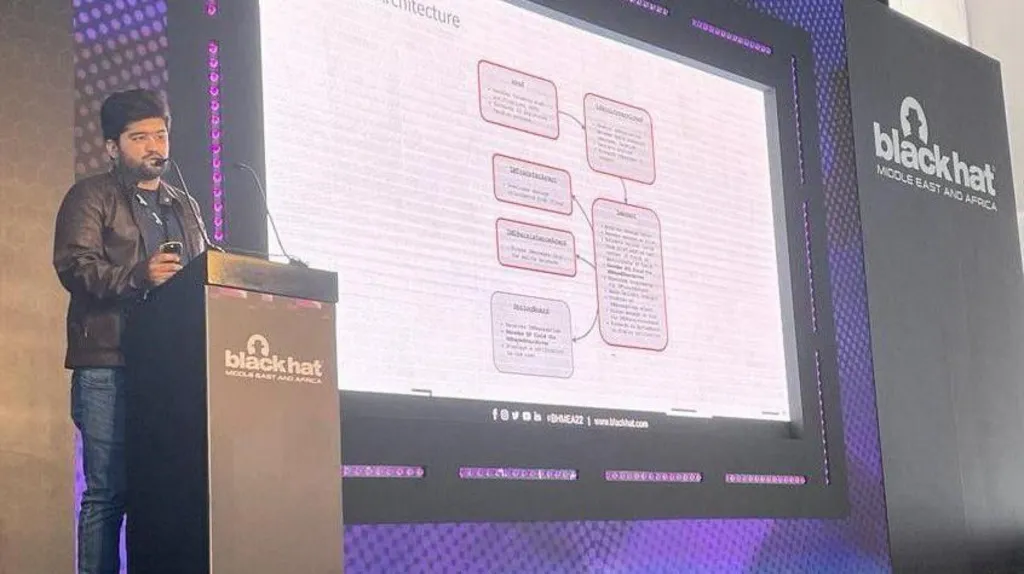

Cyber-security researcher Etizaz Mohsin demonstrated the platform's security flaws to the BBC.

To test the platform's security, a BBC reporter downloaded the Orchids desktop app onto a spare laptop and started a vibe-coding project.

The reporter asked the Orchids AI assistant to generate code for a computer game based on the BBC News website.

The AI assistant automatically compiled code on the screen, which was unintelligible to someone without coding experience.

By exploiting a specific cyber-security weakness, Mohsin was able to access the project and view and edit the code.

Mohsin then added a line of code to the project, which went unnoticed.

This action apparently allowed him to gain access to the computer, as evidenced by the subsequent appearance of a "Joe is hacked" notepad file on the desktop and a changed wallpaper featuring an AI hacker image.

The potential implications of this hack on the platform's numerous projects are significant.

A malicious hacker could have easily installed a virus on the machine without any action required from the victim.

Sensitive personal or financial data could have been compromised.

An attacker could have accessed internet history or even used the computer's cameras and microphones for surveillance.

Most cyber-attacks involve tricking victims into downloading malicious software or divulging login credentials.

This attack, by contrast, required no involvement from the victim, making it what is known as a zero-click attack.

Mohsin said the vibe-coding revolution has introduced a new class of security vulnerabilities that did not previously exist, and that letting AI handle tasks autonomously carries significant risks.

Mohsin, a 32-year-old from Pakistan currently residing in the UK, has a history of discovering dangerous software flaws, including work on the Pegasus spyware.

Mohsin discovered the flaw in December 2025 while experimenting with vibe-coding and has since attempted to contact Orchids through various channels, sending around a dozen messages.

The Orchids team responded to Mohsin this week, stating that they may have missed his warnings due to being overwhelmed with incoming messages.

According to the company's LinkedIn page, Orchids is a San Francisco-based company founded in 2025 with fewer than 10 employees.

Mohsin has identified flaws only in Orchids, not in other vibe-coding platforms such as Claude Code, Cursor, Windsurf, and Lovable.

Nonetheless, experts caution that this discovery should serve as a warning.

Professor Kevin Curran of Ulster University's cybersecurity department notes that the main security implication of vibe-coding is that, without discipline, documentation, and review, the resulting code may fail under attack.

Agentic AI tools, which perform complex tasks with minimal human input, are increasingly gaining attention.

A recent example is the Clawdbot agent, also known as Moltbot or OpenClaw, which can execute tasks on a user's device with little human intervention.

The free AI agent has been downloaded by hundreds of thousands of people, granting it deep access to computers and potentially introducing numerous security risks.

Karolis Arbaciauskas, head of product at NordPass, advises caution when using such tools.

Arbaciauskas warns that while it may be intriguing to see what an AI agent can do without security measures, this level of access is also highly insecure.

He recommends running these tools on separate, dedicated machines and using disposable accounts for experimentation.

(Removed to maintain the same number of paragraphs)

-

News10 hours ago

News10 hours agoAustralian Politics Faces Questions Over Gender Equality Amid Sussan Ley’s Appointment

-

News7 hours ago

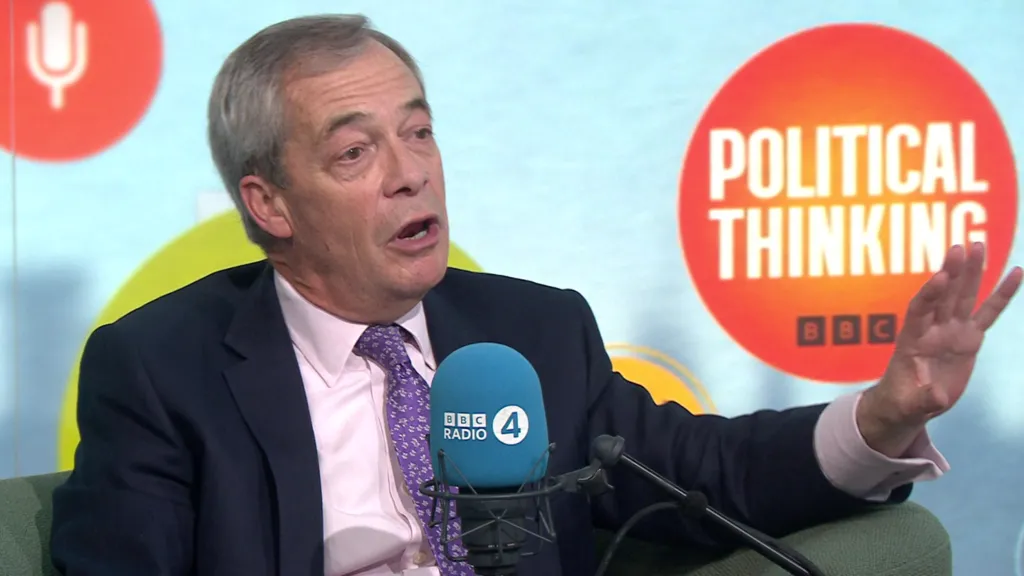

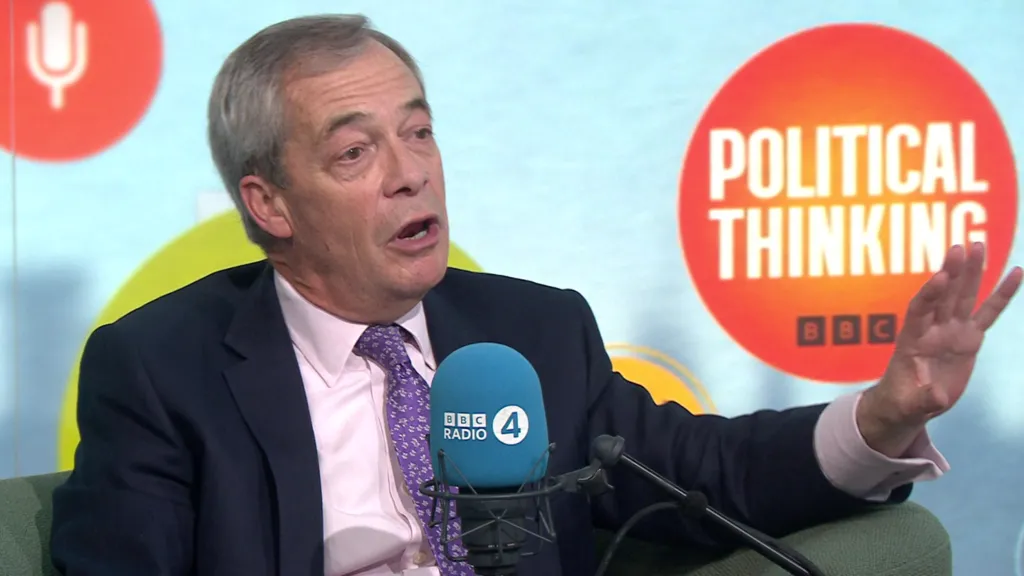

News7 hours agoFarage Says Reform to Replace Traditional Tory Party

-

News7 hours ago

News7 hours agoWrexham Pair Seek Win Against Former Team Ipswich

-

News12 hours ago

News12 hours agoLiberal Party Removes Australia’s First Female Leader

-

News10 hours ago

News10 hours agoUK Braces for Cold Snap with Snow and Ice Alerts Expected

-

News7 hours ago

News7 hours agoHusband’s alleged £600k theft for sex and antiques blamed on drug side effects

-

News2 days ago

News2 days agoSunbed ads spreading harmful misinformation to young people

-

Business12 hours ago

Business12 hours agoBBC Reporter Exposed to Cyber Attack Due to Vulnerabilities in AI Coding Tool